Back to Business: AI and the Future of Attribution

When simulation outpaces IP law, identity must carry the weight of attribution—and VISTA shows how to weave the threads back in.

If you’ve ever stared at an AI-generated image and wondered, "Who actually owns this?"—you’re not alone. The aesthetics may be novel, but the infrastructure behind them is hollow.

Hollow, not in terms of compute, but in terms of epistemic accountability: Who created this? What data was used? Was consent given? Can it be traced back to anything real? These aren’t legal questions alone—they’re architectural. And right now, we’re building cathedrals on sand.

Most organisations, especially those exploring generative AI, are operating without the structural safeguards that traditionally anchor authorship and ownership. And in the world of AI-generated art, this absence becomes more than just a technical oversight. It erodes the very idea of creative agency. If a model trained on scraped data outputs something aesthetically compelling, who is the creator? The developer? The prompt engineer? The anonymous artist whose work was absorbed by the dataset? The answer, currently, is a shrug, and that’s not sustainable.

Where We Are: Two Paths Ahead

This leaves us with two choices:

Abandon the use of large language models and generative systems altogether—decide that the epistemic cost is too great and the ethical ambiguity too high.

Or: we find a way. Not by pretending the simulation is real, but by building new scaffolds that hold it accountable. Not by asking the model to justify itself, but by designing infrastructures that make justification possible in the first place.

What we’re proposing is the second path—and it shares something important with the philosophy behind fractional ownership:

Fractional provenance: Multiple contributors—datasets, prompts, models, users—can be acknowledged and weighted.

Programmable attribution: Ownership need not be singular or static. It can be distributed, dynamic, and traceable.

Embedded rights: Consent, usage, and governance can be inscribed at the infrastructure layer—not enforced downstream.

If AI-generated content is the product of many inputs—datasets built on the backs of countless creators, decisions distributed across multiple agents and configurations—then the system that governs it must be capable of honoring that plurality.
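To make fractional provenance and embedded rights less abstract, here is a minimal Python sketch of how weighted attribution could be computed. It is not VISTA's implementation. The `Contribution` schema, the `did:example:` identifiers, and the assumption that influence weights arrive already assigned are all hypothetical. Consent is checked before any share is computed. Payouts use the largest-remainder method, so fractional shares of an integer amount always add up exactly.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class Contribution:
    """One input to a generated artifact (hypothetical schema, not VISTA's)."""
    contributor_id: str  # assumed to be a DID string; placeholder values below
    weight: int          # relative influence; assumed to be assigned upstream
    consented: bool      # embedded right: did the contributor opt in to reuse?


def attribution_shares(contributions):
    """Normalize the weights of consenting contributors into exact fractional shares."""
    eligible = [c for c in contributions if c.consented and c.weight > 0]
    total = sum(c.weight for c in eligible)
    if total == 0:
        return {}
    shares = {}
    for c in eligible:
        # Accumulate, in case one contributor supplied several inputs.
        shares[c.contributor_id] = shares.get(c.contributor_id, Fraction(0)) + Fraction(c.weight, total)
    return shares


def split_payment(amount_cents, shares):
    """Split a non-negative integer amount by shares (largest-remainder method),
    so the parts always sum exactly to amount_cents."""
    raw = {cid: amount_cents * s for cid, s in shares.items()}
    floors = {cid: int(v) for cid, v in raw.items()}  # int() floors a non-negative Fraction
    leftover = amount_cents - sum(floors.values())
    # Give the remaining cents to the largest fractional remainders (ties broken by id).
    order = sorted(raw, key=lambda cid: (-(raw[cid] - floors[cid]), cid))
    for cid in order[:leftover]:
        floors[cid] += 1
    return floors


# Usage with hypothetical contributors.
inputs = [
    Contribution("did:example:designerA", 3, True),
    Contribution("did:example:designerB", 2, True),
    Contribution("did:example:user123", 1, True),
    Contribution("did:example:anonymous", 4, False),  # no consent: excluded entirely
]
shares = attribution_shares(inputs)
print(split_payment(100, shares))
# {'did:example:designerA': 50, 'did:example:designerB': 33, 'did:example:user123': 17}
```

Exact `Fraction` arithmetic avoids floating-point drift in the shares. The genuinely hard part, estimating how much each input actually influenced an output, is assumed away here.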

What We're Missing: Lineage and Legibility

As I’ve argued elsewhere (Owning the Simulacrum, 2025), the collapse of authorship in generative systems isn’t just a legal blind spot—it’s an epistemic one.

Intellectual property law was built for a world where originality had a stable referent, and ownership could be cleanly assigned. But generative AI scrambles those foundations. It simulates novelty without origin, and in doing so, unravels the very logic that IP protections depend on.

Generative AI has smuggled simulation into the heart of knowledge work. These systems do not create; they recombine. They do not author; they mimic. And yet we treat their outputs—art, music, prose—as if they bear the weight of intention, provenance, and authorship. They don’t. Because we’ve designed them to be fluent, not faithful. They optimize for coherence, not lineage. And so we find ourselves at a strange juncture: the more these systems appear original, the harder it becomes to prove origin.

This is not just a wrinkle in copyright law—it’s the collapse of authorship itself. When AI simulates novelty without reference, we lose not only control over intellectual property, but over the very conditions that make IP meaningful. We lose the ability to say: this came from me. This belongs to us. This was made by someone, for someone, in some context that matters.

Take the 2023 case of "Heart on My Sleeve," the AI-generated Drake and The Weeknd song that went viral. The track, which convincingly mimicked the voices of both artists, was created using AI models trained on public data. It amassed millions of views before being taken down. Universal Music Group called for its removal, but no legal mechanism could definitively identify or stop the act of mimicry itself. The model had no memory, no authorship trail, and no way of honoring the rights of the original artists. The system was structurally incapable of respect.

In legal and regulatory frameworks, the burden of proof typically lies in attribution. But what happens when the systems generating content are incapable of retaining the chain of attribution? You get a content economy built on ungrounded claims. You get lawsuits without evidence, apologies without traceability, and a flood of media that can’t be audited. We are watching this unfold in real time across music, design, advertising, and journalism. And the response can’t just be moral panic. It has to be architectural.

What We're Building: VISTA as a Scaffold

That’s why I’m interested in identity—not as authentication, but as infrastructure. Because when we treat identity as an epistemic anchor, we don’t just authenticate an agent. We preserve the conditions for attribution, for ownership, for meaning. In a plural epistemic world—where knowledge is oral, communal, or intergenerational—that’s not a technicality. It’s the whole point.

Enter VISTA. A modular framework I’ve been building to bring verifiability back into AI agent infrastructure. You’ve probably seen agentic systems getting slotted into creative workflows—generating ads, music, game levels, visual art. But what’s missing isn’t flair—it’s traceability. VISTA doesn’t overhaul your system. It adds a scaffold. It has five distinct layers, but for now, let’s focus on one: Identity.

Figma as a Use Case: What Identity Could Unlock

Take Figma—a tool rapidly expanding into generative territory. With features like FigJam AI, GPT-based image generation, and Claude-powered design-to-code workflows, Figma is becoming more than a canvas. It’s a collaborative agentic system. But it lacks one thing: verifiable identity.

Imagine this specific use case: a user enters a high-level requirement—"design me a dashboard for a weather app"—and Figma’s generative UI feature responds with three design variations. Behind the scenes, these layouts are not created ex nihilo; they’re composed from design primitives, templates, and styling conventions originally crafted and contributed by Figma’s global community of designers. So whose work is it?

Right now, that lineage disappears. The output is flattened into a single, synthetic frame. No trace of whose layout logic was reused. No acknowledgment of whose color palette was incorporated. No record of how the model reassembled these pieces into the final design.

But with a decentralized identity layer powered by VISTA:

Each underlying component—grid system, color scheme, icon set—could retain cryptographic links to its original contributor.

The final composition would include a ledger of its epistemic inputs—human and machine.

Designers could opt in to have their design elements used with traceable provenance, allowing for downstream attribution or even fractional compensation.
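The first two points can be sketched as a content-addressed provenance record. The Python below assumes a hypothetical schema (`Component`, `compose`, `verify`) and placeholder `did:example:` identifiers. It is not VISTA's or Figma's API. Each component is hashed over canonical JSON, and the composed design's record hash commits to every input, so dropping or altering a contributor becomes detectable. Note that a hash proves integrity, not authorship. Binding an input to a contributor would additionally require a signature from a key listed in that contributor's DID document, which this sketch omits.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


def canonical_hash(obj) -> str:
    """SHA-256 over canonical JSON (sorted keys, no whitespace)."""
    data = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class Component:
    kind: str          # e.g. "grid", "palette", "icon-set"
    contributor: str   # contributor's DID (hypothetical identifiers below)
    content_hash: str  # SHA-256 of the component's canonical content


def make_component(kind, contributor, content: dict) -> Component:
    # Identical content yields an identical hash regardless of key order.
    return Component(kind, contributor, canonical_hash(content))


def compose(output_id, generator, components):
    """Build a provenance record (a 'ledger of inputs') for a generated design."""
    inputs = sorted((asdict(c) for c in components), key=lambda d: d["content_hash"])
    body = {"output": output_id, "generator": generator, "inputs": inputs}
    return {**body, "record_hash": canonical_hash(body)}


def verify(record) -> bool:
    """Recompute the record hash; any edited or dropped input changes it."""
    body = {k: v for k, v in record.items() if k != "record_hash"}
    return canonical_hash(body) == record["record_hash"]


# Usage with hypothetical contributors and a hypothetical generator identity.
grid = make_component("grid", "did:example:designerA", {"columns": 12, "gutter": 16})
palette = make_component("palette", "did:example:designerB", {"primary": "#1E88E5", "accent": "#FFC107"})
record = compose("weather-dashboard-v1", "did:example:figma-ai", [grid, palette])
print(verify(record))  # True

record["inputs"] = record["inputs"][:1]  # silently drop one contributor
print(verify(record))  # False
```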

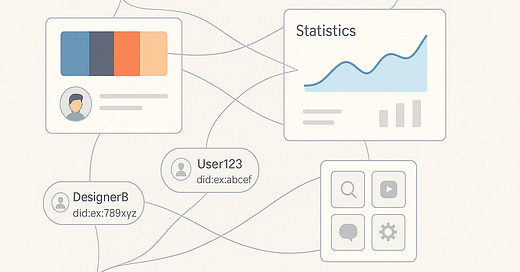

This diagram illustrates what the future of generative collaboration could look like when identity becomes infrastructure. At the center are various contributors—@DesignerA, DesignerB, User123, and even the generative system itself, Figma AI—each tagged with a decentralized identifier (DID). These identities are cryptographically bound to their creative inputs: color palettes, layout elements, user interfaces, even statistical insights. Rather than allowing Figma’s generative features to flatten all contributions into a synthetic output, the VISTA framework proposes a traceable design ecosystem—where each design primitive retains a link to its origin, whether human or AI-assisted. Statistics are no longer abstract; they reflect verifiable interactions. Tools are no longer generic; they are anchored to identity-aware provenance. In this world, a dashboard isn’t just generated—it’s reassembled transparently, with a ledger of epistemic inputs. The result is more than attribution. It’s a scaffolding for creative legitimacy, where ownership isn’t erased by automation but distributed and programmable by design.

In short: we move from opaque remixing to transparent reassembly.

This wouldn’t break the workflow. It would enhance it. Users would still get generative speed. Contributors would get recognition. And platforms like Figma would gain legitimacy—anchoring AI-generated outputs in a scaffold of authorship rather than a blur of automation.

That’s what VISTA makes possible. And it starts with one design decision: treat identity not as metadata, but as the foundation of meaning.

Learn more about VISTA here.